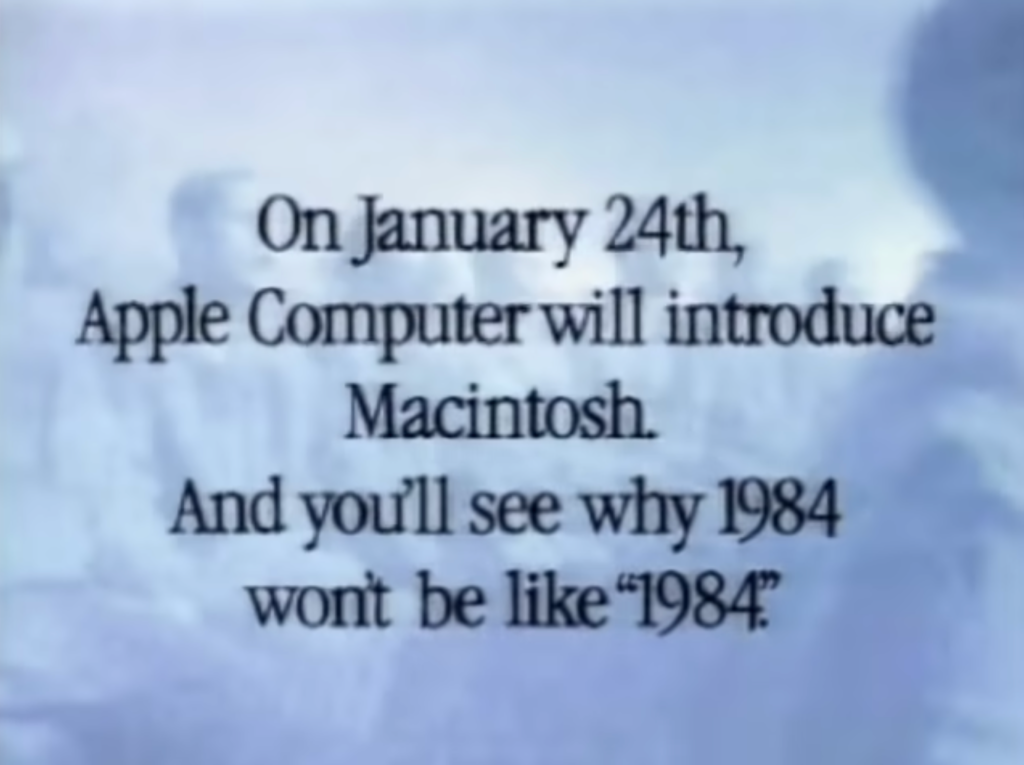

Forty years ago today (24th January 1984) a young Steve Jobs took to the stage at the Flint Center in Cupertino, California, to introduce the Apple Macintosh desktop computer, and the world found out “why 1984 won’t be like ‘1984’”.

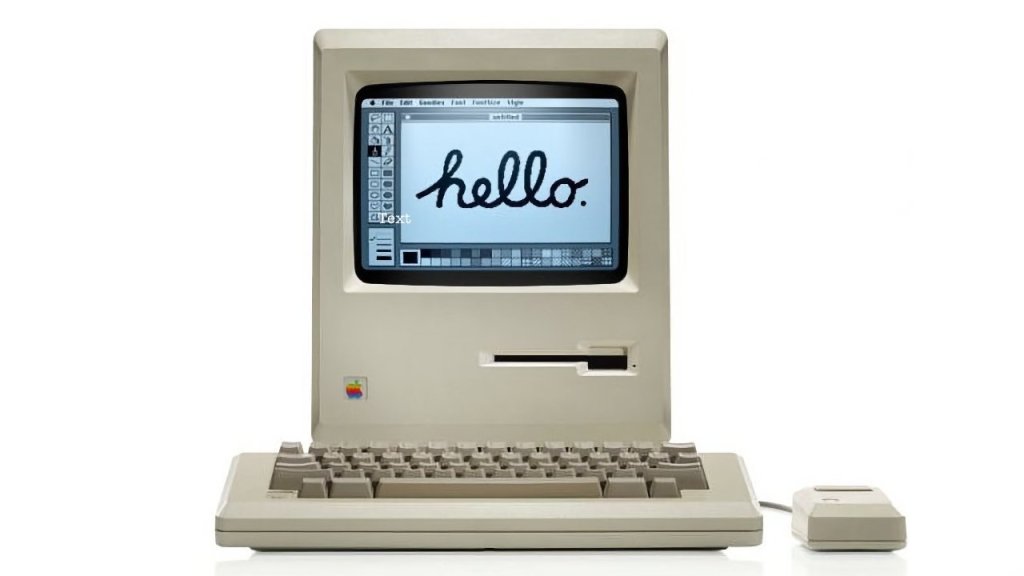

The Apple Macintosh, or ‘Mac’, boasted cutting-edge specifications for its day. It had an impressive 9-inch monochrome display with a resolution of 512 x 342 pixels, a 3.5-inch floppy disk drive, and 128 KB of RAM. The Motorola 68000 microprocessor (32-bit internally, with a 16-bit external data bus) powered this compact yet powerful machine, which set new standards for graphical user interfaces and ease of use.

The Mac had been gestating in Steve Jobs’ restless and creative mind for at least five years but did not start its difficult birth process until 1981, when Jobs took over the Macintosh project that Jef Raskin had started in 1979 and gathered a team of talented individuals around it, including Andy Hertzfeld and Bill Atkinson. The collaboration of these creative minds led to the Macintosh, a computer that not only revolutionized the industry but also left an indelible mark on the way people interact with technology.

The Mac was one of the first personal computers to feature a graphical user interface (Microsoft Windows 1.0 was not released until November 1985), using icons, windows, and a mouse for navigation instead of a command line. This approach significantly influenced the development of GUIs across various operating systems.

Possibly of more significance is that some of the lessons learned from the Mac have influenced, and continue to influence, the development of subsequent Apple products. Steve Jobs’ (and later Jony Ive’s) commitment to simplicity and elegance in design became a guiding principle for products like the iPod, iPhone, iPad, and MacBook, and is what really makes the Apple ecosystem (as well as allowing Apple to charge the prices it does).

One of the pivotal moments in the Mac’s development was the now-famous “1984” ad, which had its one and only national airing two days earlier, during a Super Bowl XVIII commercial break, and built huge anticipation for the groundbreaking product.

I was a relatively late convert to the cult of Apple, not buying my first computer (a MacBook Pro) until 2006. I still have this computer and periodically start it up for old times’ sake. It still works perfectly, albeit very slowly, and runs a now very old copy of Mac OS X.

A more significant event, for me at least, was that a year after the Mac launch I moved to Cupertino to take a job as a software engineer at a company called ROLM, a telecoms provider that had just been bought by IBM and was looking to move into Europe. ROLM was on a recruiting drive to hire engineers from Europe who knew how to develop products for that marketplace, and I was lucky enough to have the right skills (digital signalling systems) at the right time.

At the time of my move I had some awareness of Apple but got to know it better as I ended up living only a few blocks from Apple’s HQ on Mariani Avenue, Cupertino (I lived just off Stevens Creek Boulevard, which was chock-full of car dealerships at that time).

The other slight irony of this is that IBM (ROLM’s owner) was of course “big brother” in Apple’s ad, and the young girl with the sledgehammer was out to break its then virtual monopoly on personal computers. IBM no longer makes personal computers, whilst Apple has obviously gone from strength to strength.

Happy Birthday Mac!