Ten years ago this week (on 9th January 2007) the late Steve Jobs, then at the hight of his powers at Apple, introduced the iPhone to an unsuspecting world. The history of that little device (which has got both smaller and bigger in the interceding ten years) is writ large over the entire Internet so I’m not going to repeat it here. However it’s worth looking at the above video on YouTube not just to remind yourself what a monumental and historical moment in tech history this was, even though few of us realised it at the time, but also to see a masterpiece in how to launch a new product.

Within two minutes of Jobs walking on stage he has the audience shouting and cheering as if he’s a rock star rather than a CEO. At around 16:25 when he’s unveiled his new baby and shows for the first time how to scroll through a list in a screen (hard to believe that ten years ago know one knew this was possible) they are practically eating out of his hand and he still has over an hour to go!

This iPhone keynote, probably one of the most important in the whole of tech history, is a case study on how to deliver a great presentation. Indeed, Nancy Duart in her book Resonate, has this as one of her case studies for how to “present visual stories that transform audiences”. In the book she analyses the whole event to show how Jobs’ uses all of the classic techniques of storytelling, establish what is and what could be, build suspense, keep your audience engaged, make them marvel and finally show them a new bliss.

The iPhone product launch, though hugely important, is not what this post is about though. Rather, it’s about how ten years later the iPhone has kept pace with innovations in technology to not only remain relevant (and much copied) but also to continue to influence (for better and worse) the way people interact, communicate and indeed live. There are a number of enabling ideas and technologies, both introduced at launch as well as since, that have enabled this to happen. What are they and how can we learn from the example set by Apple and how can we improve on them?

Open systems generally beat closed systems

At its launch Apple had created a small set of native apps the making of which was not available to third-party developers. According to Jobs, it was an issue of security. “You don’t want your phone to be an open platform,” he said. “You don’t want it to not work because one of the apps you loaded that morning screwed it up. Cingular doesn’t want to see their West Coast network go down because of some app. This thing is more like an iPod than it is a computer in that sense.”

Jobs soon went back on that decision which is one of the factors that has led to the overwhelming success of the device. There are now 2.2 million apps available for download in the App Store with over 140 billion downloads made since 2007.

As has been shown time and time again, opening systems up and allowing access to third party developers nearly always beat keeping systems closed and locked down.

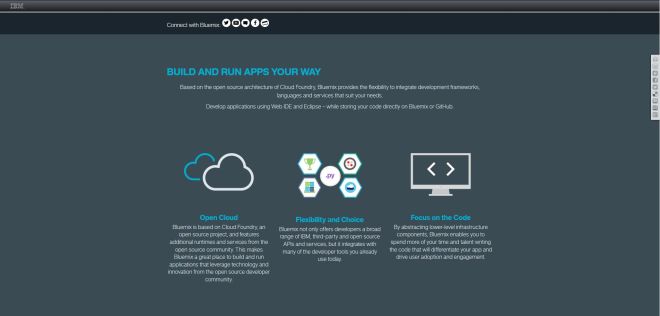

Open systems need easy to use ecosystems

Claiming your system is open does not mean developers will flock to it to extend your system unless it is both easy and potentially profitable to do so. Further, the second of these is unlikely to happen unless the first enabler is put in place.

Today with new systems being built around Cognitive computing, the Internet of Things (IoT) and Blockchain companies both large and small are vying with each other to provide easy to use but secure ecosystems that allow these new technologies to flourish and grow, hopefully to the benefits to business and society as a whole. There will be casualties on the way but this competition, and the recognition that systems need to be built right rather than us just building the right system at the time is what matters.

Open systems must not mean insecure systems

One of the reasons Jobs gave for not initially making the iPhone an open platform was his concerns over security and for hackers to break into those systems wreaking havoc. These concerns have not gone away but have become even more prominent. IoT and artificial intelligence, when embedded in everyday objects like cars and kitchen appliances as well as our logistics and defence systems have the potential to cause there own unique and potentially disastrous type of destruction.

The cost of data breaches alone is estimated at $3.8 to $4 million and that’s without even considering the wider reputational loss companies face. Organisations need to monitor how security threats are evolving year to year and get well-informed insights about the impact they can have on their business and reputation.

Ethics matter too

With all the recent press coverage of how fake news may have affected the US election and may impact the upcoming German and French elections as well as the implications of driverless cars making life and death decisions for us, the ethics of cognitive computing is becoming a more and more serious topic for public discussion as well as potential government intervention.

In October last year the Whitehouse released a report called Preparing for the Future of Artificial Intelligence. The report looked at the current state of AI, its existing and potential applications, and the questions that progress in AI raise for society and public policy and made a number of recommendations on further actions. These included:

- Prioritising open training data and open data standards in AI.

- Industry should work with government to keep government updated on the general progress of AI in industry, including the likelihood of milestones being reached

- The Federal government should prioritize basic and long-term AI research

As part of the answer to addressing the Whitehouse report this week a group of private investors, including LinkedIn co-founder Reid Hoffman and eBay founder Pierre Omidyar, launched a $27 million research fund, called the Ethics and Governance of Artificial Intelligence Fund. The group’s purpose is to foster the development of artificial intelligence for social good by approaching technological developments with input from a diverse set of viewpoints, such as policymakers, faith leaders, and economists.

I have discussed before about transformative technologies like the world wide web have impacted all of our lives, and not always for the good. I hope that initiatives like that of the US government (which will hopefully continue under the new leadership) will enable a good and rationale public discourse on how we allow these new systems to shape our lives for the next ten years and beyond.

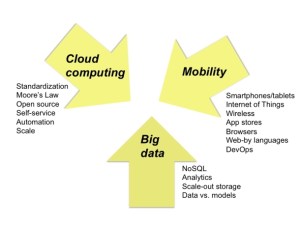

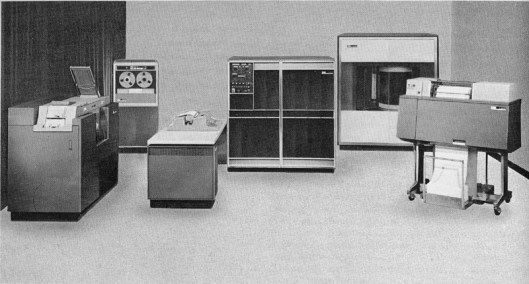

The business of IT has clearly gone through several transformational stages since the modern age of commercial computing began 55 years ago with the introduction of the

The business of IT has clearly gone through several transformational stages since the modern age of commercial computing began 55 years ago with the introduction of the  During the subsequent 50 years we have gone through a number of different ages of computing; each corresponding to the major, underlying architecture which was dominant during that period. The ages with their (very) approximate time spans are:

During the subsequent 50 years we have gone through a number of different ages of computing; each corresponding to the major, underlying architecture which was dominant during that period. The ages with their (very) approximate time spans are: