Today (10th October 2023) is Ada Lovelace Day. In this blog post I discuss why Ada Lovelace (and indeed Mary Shelley, who was indirectly connected to Ada) is as relevant today as she was in her own time.

In the summer of 1816 [1], five young people holidaying at the Villa Diodati near Lake Geneva in Switzerland found their vacation rudely interrupted by a torrential downpour which trapped them indoors. Faced with the monotony of confinement, one member of the group proposed an ingenious idea to break the boredom: each of them should write a supernatural tale to captivate the others.

Among these five were some notable figures of their time: Lord Byron, the celebrated English poet, and his friend and fellow poet, Percy Shelley. Alongside them were Shelley’s partner and future wife, Mary, her stepsister Claire Clairmont, who happened to be Byron’s mistress, and Byron’s physician, Dr. Polidori.

Lord Byron, burdened by the legal disputes surrounding his divorce and the financial arrangements for his newborn daughter, Ada, found it impossible to fully engage in the challenge (despite having suggested it). However, both Dr. Polidori and Mary Shelley embraced the task with fervour, creating stories that not only survived the holiday but continue to thrive today. Polidori’s tale was later published as The Vampyre: A Tale, a precursor to many of the modern vampire movies and TV programmes we know today. Mary Shelley’s story, which had come to her in a haunting nightmare that very night, gave birth to the core concept of Frankenstein, published in 1818 as Frankenstein; or, The Modern Prometheus. As Jeanette Winterson asserts in her book 12 Bytes [2], Frankenstein is not just a story about “the world’s most famous monster; it’s a message in a bottle.” We’ll see later why this message resonates even more today.

First, though, we must shift our focus to another side of Lord Byron’s tumultuous life: his divorce settlement with his wife, Annabella Milbanke. In this settlement, Byron expressed his desire to shield his daughter from the allure of poetry, an inclination that suited Annabella perfectly, as one poet in the family was more than sufficient for her. Instead, young Ada was given a mathematics tutor, whose duties extended beyond teaching mathematics to eradicating any poetic inclinations Ada might have inherited. Could this be an early instance of the enforced segregation of the arts and STEM disciplines, I wonder?

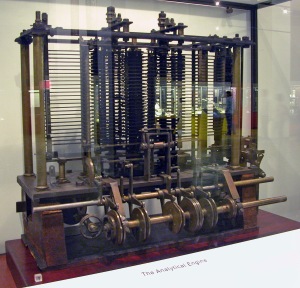

Ada excelled in mathematics, and her exceptional abilities, combined with her family connections, earned her an invitation, at the age of 17, to a London soirée hosted by Charles Babbage, then Lucasian Professor of Mathematics at Cambridge. In Babbage’s drawing room, Ada encountered a model of his “Difference Engine”, a contraption that so enraptured her that she spent the evening engrossed in conversation with Babbage about its intricacies. Babbage, in turn, was elated to have found someone who shared his enthusiasm for his machine and readily showed Ada his plans. He later invited her to collaborate with him on the machine’s successor, the “Analytical Engine”.

This visionary machine embodied the radical notion of programmability, using punched cards like those employed in the weaving looms of that era. In 1842, Ada Lovelace (as she had become by then) was tasked with translating into English a French article by the Italian engineer Luigi Menabrea, based on a lecture Babbage had given in Turin. However, Ada went well beyond mere translation, adding extensive notes that set out her own groundbreaking ideas about Babbage’s computing machine. These notes proved more extensive and profound than the original article itself, securing Ada Lovelace’s place in history as a pioneer of computer science and mathematics.

In one of these notes, she wrote an algorithm for the Analytical Engine to compute Bernoulli numbers, often described as the first published computer program. Although Babbage’s engine was too far ahead of its time to be built with the technology of the day, Ada is still widely credited as the world’s first computer programmer. But there is another twist to this story that brings us closer to the present day.
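For readers curious about what such a program actually computes, here is a minimal modern sketch in Python. It is not a reconstruction of Lovelace’s own method or of the Analytical Engine’s operations; it simply uses the standard textbook recurrence for Bernoulli numbers (with the convention B₁ = -1/2), and the function name `bernoulli_numbers` is purely illustrative.

```python
from fractions import Fraction
from math import comb


def bernoulli_numbers(n: int) -> list[Fraction]:
    """Return the Bernoulli numbers B_0..B_n as exact fractions.

    Illustrative sketch only: uses the modern recurrence
    sum_{k=0}^{m} C(m+1, k) * B_k = 0 (for m >= 1), with B_1 = -1/2.
    """
    if n < 0:
        raise ValueError("n must be non-negative")
    b = [Fraction(1)]  # B_0 = 1
    for m in range(1, n + 1):
        # Sum the terms for the already-known B_0..B_{m-1}
        total = sum(comb(m + 1, k) * b[k] for k in range(m))
        # The B_m term has coefficient C(m+1, m) = m + 1, so solve for it
        b.append(-total / (m + 1))
    return b


if __name__ == "__main__":
    for i, value in enumerate(bernoulli_numbers(8)):
        print(f"B_{i} = {value}")
```

Exact fractions are used because Bernoulli numbers are rational, and floating point would blur the pattern; running the sketch shows that every odd-indexed value after B₁ is zero.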

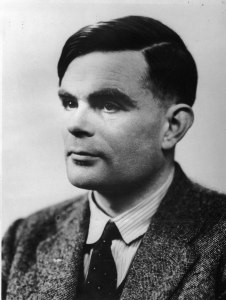

Fast forward to the University of Manchester, 1950. Alan Turing, the now fêted but ultimately doomed mathematician who led the team that cracked intercepted, coded messages sent by the German navy in WWII, has just published a paper called Computing Machinery and Intelligence [3]. This was one of the first papers ever written on artificial intelligence (AI), and it opens with the bold premise: “I propose to consider the question, ‘Can machines think?’”

Turing did indeed believe computers would one day (in about 50 years, he thought, so around the year 2000) be able to think, and he devised his famous “Turing Test” as a way of verifying his proposition. In his paper Turing also felt the need to “refute” arguments he thought might be made against his bold claim, including one made by none other than Ada Lovelace more than a hundred years earlier. In the same notes in which she wrote the world’s first computer program, Lovelace also said:

“It is desirable to guard against the possibility of exaggerated ideas that might arise as to the powers of the Analytical Engine. The Analytical Engine has no pretensions whatever to originate anything. It can do whatever we know how to order it to perform. It can follow analysis, but it has no power of anticipating any analytical relations or truths”.

Although Lovelace was optimistic about the power of the Analytical Engine, should it ever be built, thinking creatively was not one of the things she expected it to do.

Turing disputed Lovelace’s view on the grounds that she could have had no idea of the enormous speed and storage capacity of modern (remember, this was 1950) computers, which he believed could come to rival those of the human brain. Like the brain, such machines could then process their stored information to arrive at sometimes “surprising” conclusions. To quote Turing directly from his paper:

“It is a line of argument we must consider closed, but it is perhaps worth remarking that the appreciation of something as surprising requires as much of a ‘creative mental act’ whether the surprising event originates from a man, a book, a machine or anything else.”

This brings us bang up to date with the arguments currently raging about whether systems like ChatGPT, DALL-E or Midjourney are creative, or even sentient in some way. Has Turing’s prophecy finally been fulfilled? Or was Ada Lovelace right all along, and computers can never be truly creative, because creativity requires not just a reconfiguration of what someone else has made but original thought grounded in actual human experience?

One undeniable truth prevails in this narrative: Ada was good at working with what she didn’t have. Not only was Babbage unable to build his machine, meaning Lovelace never had one to work with, but she also lacked male privilege and a formal education (a scarce commodity for women at the time), a stark reminder of the limitations imposed on her gender during that period.

Have things moved on today for women and young girls? A glimpse at the typical composition of a computer science classroom, whether at secondary or tertiary level, raises the question: have we truly moved beyond the constraints of the past? And if not, why does this gender imbalance persist?

Over the past five years or more, many studies and reports have been published on the problem of too few women entering STEM careers, and we seem to be gradually homing in not just on what the core issues are but also on how to address them. What seems to be lacking is the will, or the funding (or both), to make it happen.

So what can be done? First, some facts:

- Girls lose interest in STEM as they get older. A report from Microsoft back in 2018 found that confidence in coding wanes as girls get older, highlighting the need to connect STEM subjects to real-world people and problems by tapping into girls’ desire to be creative [4].

- Girls and young women do not associate STEM jobs with being creative. Most girls and young women describe themselves as creative and want to pursue a career that helps the world, but they do not see STEM jobs as offering either of these things [4].

- Female students rarely consider a career in technology as their first choice. Only 27% of female students say they would consider a career in technology, compared to 61% of males, and only 3% say it is their first choice [5].

- Most students (male and female) can’t name a famous woman working in technology. A lack of female role models is also reinforcing the perception that a technology career isn’t for them. Only 22% of students can name a famous woman working in technology, whereas two thirds can name a famous man [5].

- Female pupils feel STEM subjects, though highly paid, are not ‘for them’. Female Key Stage 4 pupils perceived that studying STEM subjects was potentially a more lucrative choice in terms of employment. However, when compared to male pupils, they enjoyed other subjects (e.g., arts and English) more [6].

Solutions to these issues are now increasingly well understood:

- Increasing the number of STEM mentors and role models – including parents – to help build young girls’ confidence that they can succeed in STEM. Girls who are encouraged by their parents are twice as likely to stay in STEM, and in some areas, such as computer science, dads can have a greater influence on their daughters than mums, yet they are less likely than mothers to talk to their daughters about STEM.

- Creating inclusive classrooms and workplaces that value female opinions. It’s important to celebrate the stories of women who are in STEM right now, today.

- Providing teachers with more engaging and relatable STEM curricula, such as 3D and hands-on projects, the kinds of activities that have been shown to help sustain girls’ interest in STEM over the long haul.

- Multiple interventions, starting early and carrying on throughout school, are important ways of ensuring girls stay connected to STEM subjects. Interventions are ideally done by external people working in STEM who can repeatedly reinforce key messages about the benefits of working in this area. These people should also be able to explain the importance of creativity and how working in STEM can change the world for the better [7].

- Schoolchildren (all genders) should be taught to understand how thinking works, from neuroscience to cultural conditioning; how to observe and interrogate their thought processes; and how and why they might become vulnerable to disinformation and exploitation. Self-awareness could turn out to be the most important topic of all [8].

Before we finish, let’s return to that “message in a bottle” that Mary Shelley sent out to the world over two hundred years ago. As Jeanette Winterson points out:

“Mary Shelley may be closer to the world that is to become than either Ada Lovelace or Alan Turing. A new kind of life form may not need to be human-like at all and that’s something that is achingly, heartbreakingly, clear in ‘Frankenstein’. The monster was originally designed to be like us. He isn’t and can’t be. Is that the message we need to hear?” [2].

If we are to heed Shelley’s message from the past, the rapidly evolving nature of AI means we need people from as diverse a set of backgrounds as possible. These should include people who can bring constructive criticism to the way technology is developed and who have a deeper understanding of what people really need, rather than what they think they want, from their tech. Women must become essential players in this, not just in developing this technology but also in guiding and critiquing its adoption and use. As Mustafa Suleyman (co-founder of DeepMind) says in his book The Coming Wave [10]:

“Credible critics must be practitioners. Building the right technology, having the practical means to change its course, not just observing and commenting, but actively showing the way, making the change, effecting the necessary actions at source, means critics need to be involved.”

As we move away from the mathematical nature of computing and programming towards one driven by so-called descriptive programming, such as prompt engineering [9], it will be important to include those who are not technical but who are creative and empathetic to people’s needs, and who perhaps even understand the limits we should place on technology. The four Cs (creativity, critical thinking, collaboration and communication) are skills we all need to adopt, and they are ones at which women in particular seem to excel.

On this, Ada Lovelace Day 2023, we should not just celebrate Ada’s achievements of all those years ago but also recognise how she ignored and fought back against the prejudices and severe restrictions on education that women like her faced. Ada pushed ahead regardless and became a true pioneer and a founder of a whole industry that did not really get going until more than 100 years after her work. Ada, the world’s first computer programmer, should be the role model par excellence to whom all girls and young women look for inspiration, not just today but for years to come.

References

- [1] Mary Shelley, Frankenstein and the Villa Diodati, British Library, https://www.bl.uk/romantics-and-victorians/articles/mary-shelley-frankenstein-and-the-villa-diodati

- [2] 12 Bytes: How artificial intelligence will change the way we live and love, Jeanette Winterson, Vintage, 2022.

- [3] Computing Machinery and Intelligence, A. M. Turing, Mind, Vol. 59, No. 236 (October 1950), https://www.cs.mcgill.ca/~dprecup/courses/AI/Materials/turing1950.pdf

- [4] Why do girls lose interest in STEM? New research has some answers — and what we can do about it, Microsoft, 13th March 2018, https://news.microsoft.com/features/why-do-girls-lose-interest-in-stem-new-research-has-some-answers-and-what-we-can-do-about-it/

- [5] Women in Tech: Time to close the gender gap, PwC, https://www.pwc.co.uk/who-we-are/her-tech-talent/time-to-close-the-gender-gap.html

- [6] Attitudes towards STEM subjects by gender at KS4, Department for Education, February 2019, https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/913311/Attitudes_towards_STEM_subjects_by_gender_at_KS4.pdf

- [7] Applying Behavioural Insights to increase female students’ uptake of STEM subjects at A Level, Department for Education, November 2020, https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/938848/Applying_Behavioural_Insights_to_increase_female_students__uptake_of_STEM_subjects_at_A_Level.pdf

- [8] How we can teach children so they survive AI – and cope with whatever comes next, George Monbiot, The Guardian, 8th July 2023, https://www.theguardian.com/commentisfree/2023/jul/08/teach-children-survive-ai

- [9] Prompt Engineering, Microsoft, 23rd May 2023, https://learn.microsoft.com/en-us/semantic-kernel/prompt-engineering/

- [10] The Coming Wave, Mustafa Suleyman, The Bodley Head, 2023.