[Creativity is] the relationship between a human being and the mysteries of inspiration.

Elizabeth Gilbert – Big Magic

Another week and another letter from a group of artificial intelligence (AI) experts and public figures expressing their concern about the risk of AI. This one has really gone mainstream with Channel 4 News here in the UK having it as their lead story on their 7pm broadcast. They even managed to get Max Tegmark as well as Tony Cohn – professor of automated reasoning at the University of Leeds – on the programme to discuss this “risk of extinction”.

Whilst I am really pleased that the risks from AI are finally being discussed, we must be careful not to focus too much on the Terminator-like existential threat that some people are predicting if we don’t mitigate those risks in some way. There are certainly some scenarios which could lead to an artificial general intelligence (AGI) causing destruction on a large scale, but I don’t believe these are imminent, or as likely to happen as the death and destruction likely to be caused by pandemics, climate change or nuclear war. Instead, some of the more likely negative impacts of AGI might be:

- The development of new and powerful biochemical weapons by rogue actors as has been shown in a paper in Nature Machine Intelligence published in 2022.

- The development of autonomous drones that, if acquired by terrorists, could become weapons of mass destruction.

- The use of AI to deliver misinformation and deception on a major scale to change people’s behaviour in negative ways, causing them to help undemocratic regimes deliver their goals. We have already seen examples of this in the 2016 US presidential election and UK Brexit referendum, where Cambridge Analytica were accused of using targeted ad campaigns on Facebook to influence voter behaviour. As scientist and author Gary Marcus says, imagine what the so-called Russian Firehose of Propaganda model, described in a 2016 Rand report, could be doing with large language models like ChatGPT?

It’s worth pointing out that none of the above scenarios involve AIs suddenly deciding for themselves that they are going to wreak havoc and destruction; all of them involve humans being somewhere in the loop that initiates such actions.

It’s also worth noting that there are fairly serious rebuttals emerging to the general hysterical fear and paranoia being promulgated by the aforementioned letter. Marc Andreessen, for example, says that what “AI offers us is the opportunity to profoundly augment human intelligence to make all of these outcomes of intelligence – and many others, from the creation of new medicines to ways to solve climate change to technologies to reach the stars – much, much better from here”.

Whilst it is possible that AI could be used as a force for good, is that, as Naomi Klein asks, really going to happen under our current economic system, a system that is built to maximize the extraction of wealth and profit for a small group of hyper-wealthy companies and individuals? In her view, “AI – far from living up to all those utopian hallucinations – [is] much more likely to become a fearsome tool of further dispossession and despoilation”. I wonder if this topic will be on the agenda for the proposed global AI ‘safety measure’ summit in autumn?

Whilst both sides of this discussion have good, valid arguments for and against AI, as discussed in the first of these posts, what I am more interested in is not whether we are about to be wiped out by AI but how we as humans can coexist with this technology. AI is not going to go away because of a letter written by a group of experts. It may get legislated against, but we still need to figure out how we are going to live with artificial intelligence.

In my previous post I discussed whether AI is actually intelligent as measured against Tegmark’s definition of intelligence, namely the “ability to accomplish complex goals”. This time I want to focus on whether AI machines can actually be creative.

As you might expect, just like with intelligence, there are many, many definitions of creativity. My current favourite is the one by Elizabeth Gilbert quoted above. However, no discussion on creativity can be had without mentioning the late Ken Robinson’s definition: “Creativity is the process of having original ideas that have value”.

In a short video, Robinson notes that imagination is what is distinctive about humanity. Imagination is what enables us to step outside our current space and bring to mind things that are not present to our senses. In other words, imagination is what helps us connect our past with the present and even the future. We have what is quite possibly (or not) a unique ability among all the animals that inhabit the earth: to imagine “what if”. But to be creative you do actually have to do something. It’s no good being imaginative if you cannot turn those thoughts into actions that create something new (or at least different) that is of value.

Professor Margaret Ann Boden, who is Research Professor of Cognitive Science, defines creativity as “the ability to come up with ideas or artefacts that are new, surprising and valuable.” I would couple this definition with a quote from the marketeer and blogger Seth Godin who, when discussing what architects do, says they “take existing components and assemble them in interesting and important ways”. This too is an essential aspect of being creative: using what others have done and combining these things in different ways.

It’s important to say, however, that humans don’t just pass ideas around and recombine them – we also occasionally generate new ideas that are entirely left-field through processes we do not understand.

Maybe part of the reason for this is, as the writer William Deresiewicz says:

AI operates by making high-probability choices: the most likely next word, in the case of written texts. Artists—painters and sculptors, novelists and poets, filmmakers, composers, choreographers—do the opposite. They make low-probability choices. They make choices that are unexpected, strange, that look like mistakes. Sometimes they are mistakes, recognized, in retrospect, as happy accidents. That is what originality is, by definition: a low-probability choice, a choice that has never been made.

William Deresiewicz, Why AI Will Never Rival Human Creativity

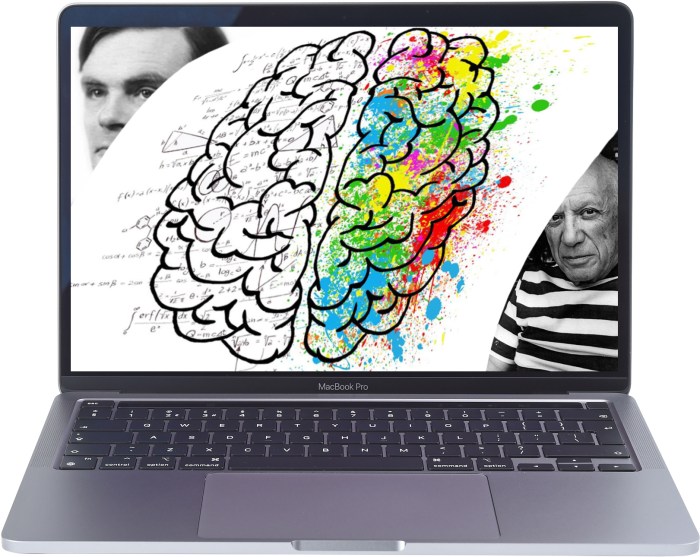

When we think of creativity, most of us associate it with some form of overt artistic pursuit such as painting, composing music, writing fiction, sculpting or photography. The act of being creative is much more than this, however. A person can be a creative thinker (and doer) even if they never pick up a paintbrush or a musical instrument or a camera. You are being creative when you decide on a catchy slogan for your product; you are being creative when you pitch your own idea for a small business; and most of all, you are being creative when you are presented with a problem and come up with a unique solution. Referring to the image at the top of my post, who is the most creative – Alan Turing, who invented a code-breaking machine that historians reckon reduced the length of World War II by at least two years, saving millions of lives, or Picasso, whose painting Guernica expressed his outrage against war?

It is because creativity is rooted in these very human qualities that AI will never be truly creative or rival our creativity. True creativity (not just a mashup of someone else’s ideas) only has meaning if it has an injection of human experience, emotion, pain, suffering, call it what you will. When Nick Cave was asked what he thought of ChatGPT’s attempt at writing a song in the style of Nick Cave, he answered this:

Songs arise out of suffering, by which I mean they are predicated upon the complex, internal human struggle of creation and, well, as far as I know, algorithms don’t feel. Data doesn’t suffer. ChatGPT has no inner being, it has been nowhere, it has endured nothing, it has not had the audacity to reach beyond its limitations, and hence it doesn’t have the capacity for a shared transcendent experience, as it has no limitations from which to transcend.

Nick Cave, The Red Hand Files

Imagination, intuition, influence and inspiration (the four I’s of creativity) are all very human characteristics that underpin our creative souls. In a world where having original ideas sets humans apart from machines, thinking creatively is more important than ever, and educators have a responsibility to foster, not stifle, their students’ creative minds. Unfortunately our current education system is not a great model for doing this. We have a system whose focus is on learning facts and passing exams, and which will never prepare people for meaningful jobs in which they work alongside machines that do the grunt work whilst they do what they do best – be CREATIVE. If we don’t change this, the following may well become true:

In tomorrow’s workplace, either the human is telling the robot what to do or the robot is telling the human what to do.

Alec Ross, The Industries of the Future